December 7, 2018

Is five users really enough for your usability tests?

In a seminal article published in 2000, "Why You Only Need to Test with 5 Users", Jakob Nielsen explained why five people are enough to carry out user tests. Is this magic number still relevant and applicable? In this article, I propose a reappraisal of the mathematical formula that the co-founder of the Nielsen Norman Group (NNG) used to obtain this result.

Summarized in a single sentence: the formula proposed by Jakob Nielsen can also be used to show that you will obtain a more comprehensive view of UX-related problems with 30 remote users than with five locally present users.

Small tests, but in large numbers!

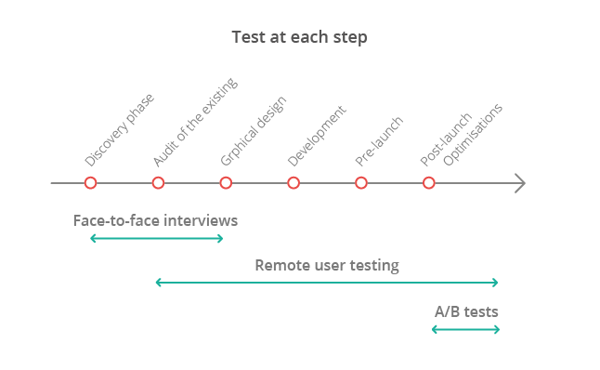

The Nielsen Norman Group continues to drive home the point that though five people is certainly sufficient, it's absolutely essential to carry out repeated tests. One of the leading arguments in support of this relates to the fact that your project is a living entity. You therefore need to test it at different stages of its development. It's also important to state that though it's the tests and research you carry out that enable you to identify the barriers and obstacles, the solutions you apply also need to be validated in their own right. You therefore need to go through repeated stages of listening to feedback until you've eliminated as many of the doubts and as much of the uncertainty as possible.

One principle we've adopted as our own here at Ferpection is that of dividing the project up into several large stages.

This imperative of conducting repeated tests is one of the three hypotheses underpinning the model proposed in the article cited above. Over the course of the rest of this article, we are going to take a look at two other hypotheses and challenge them on the basis of the incredible amount of development and evolution the web has undergone in the space of just a few years.

What the user-numbers formula really says

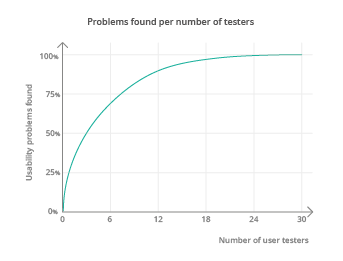

As the first stage in our reappraisal, let's begin by revisiting our classics. What exactly is this famous formula? Behind the article published on the NNG website there is, in fact, an academic publication from 1993: "A Mathematical Model of the Finding of Usability Problems". The authors of this paper are – surprise, surprise – Jakob Nielsen and Thomas K. Landauer. Using the results of several user tests covering software interfaces as a basis, they show empirically that the number of issues a research or testing exercise reveals follows an asymptotic curve, which they model as a Poisson process. The mathematical formula behind this model is well known (warning: things are going to get a little technical, but don't worry, it will only be brief).

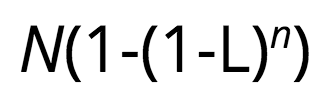

The formula enables us to calculate the number of UX problems found based on the number (n) of individuals surveyed:

$$\text{Found}(n) = N\left(1 - (1 - L)^n\right)$$

Where:

- N is the total number of problems in the interface,
- L is the proportion of problems found by a single, unique individual.

We can use this to deduce the percentage of the totality of all the problems that the problems found by a given sample represent. The greater this percentage, the better the research/testing exercise will have performed at diagnosing the issues with the interface in question.
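As a worked step, and assuming the formula in question is the standard form from Nielsen and Landauer's model, dividing the number of problems found by N gives the share of problems found. The notation $\text{Found}(n)$ is introduced here purely for illustration:

```latex
\frac{\text{Found}(n)}{N}
  = \frac{N\left(1 - (1 - L)^n\right)}{N}
  = 1 - (1 - L)^n
```

The total number of problems N cancels out, so the percentage depends only on the per-user rate L and the sample size n. This is why the rest of the discussion can reason in percentages without knowing how many problems the interface actually contains.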

But why five users?

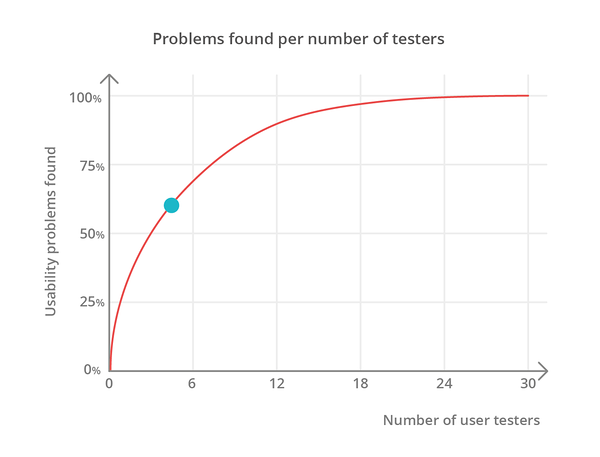

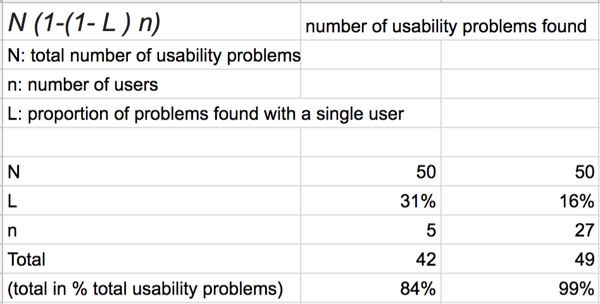

Survived as far as this point? Good, because things get simpler from here on in! In 2000 – so that's seven years later – Jakob Nielsen went on to carry out a simplification exercise and ended up concluding, in his now famous article, that five users are enough when it comes to listening to what your visitors have to say. More precisely, he showed, using the same formula, that with five users you will find 84% of the problems that exist with the UX for any given interface.

Why only 84%, you might ask? Because of two particular hypotheses that are perhaps no longer as relevant as they were in 1993:

- Working with his associate Thomas Landauer, Nielsen calculated the optimal ratio between the cost of carrying out a study and the number of interviewees, using a study budget of between $6,000 and $10,000 per five users as a basis. These days, thanks to the internet, this same budget would be enough to cover the cost of surveying the views and opinions of 30 users remotely. This cost/interviewees ratio therefore no longer makes any sense.
- The two researchers also assumed that one individual would find 31% of all the interface-related UX problems for a piece of software running under a single operating system and across a limited range of screens. These days, once again, the sheer variety of screens and systems available is increasing the number of problems that are specific to particular micro-segments of users. The 31% ratio is therefore an overestimate. In graphical terms, this means that the curve is too high. With a more realistic hypothesis of 16% (see below), only 58% of the problems would be found (represented on the graph by the blue dot).
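The two figures quoted above (84% and 58% with five users) can be checked with a minimal sketch. It assumes the share of problems found is 1 - (1 - L)^n, as in Nielsen and Landauer's model; the function name `proportion_found` is purely illustrative.

```python
def proportion_found(n_users: int, per_user_rate: float) -> float:
    """Share of all problems found by n_users, assuming 1 - (1 - L)^n."""
    return 1.0 - (1.0 - per_user_rate) ** n_users


if __name__ == "__main__":
    # Compare the original hypothesis (31%) with the lower one (16%).
    for rate in (0.31, 0.16):
        share = proportion_found(5, rate)
        print(f"L = {rate:.0%}: five users find about {share:.0%} of the problems")
```

Halving the per-user rate does not halve the result, because each additional user contributes less than the previous one, but it does lower the whole curve.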

So how many users should you survey, if not five?

We are going to revisit the formula used to work out the ideal number of users, bringing the underlying hypotheses up-to-date as we do so:

- We need to set a potential maximum for the percentage of problems found. Because our curve follows an asymptotic pattern, we cannot expect to achieve 100% and must instead content ourselves with finding 99% of the problems.
- We need to divide the percentage of problems found by a single individual by two (i.e. 15.5% instead of 31%) due to the increased variety and diversity of screens, and because we will assume that the users will be carrying out post-moderated remote tests. These kinds of tests have a reputation for being less thorough, even though this has not been scientifically proven. This research paper on this very topic demonstrates that a remote usability test is capable of revealing virtually 100% of the critical problems identified by a trained quality evaluator on the same test.

On this basis, the ideal number of users is 27. The following table contains the formula together with the hypotheses used by Jakob Nielsen in the second column and the reappraised hypotheses in the third column:
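The calculation behind this figure can be sketched by solving 1 - (1 - L)^n = target for n, which gives n = ln(1 - target) / ln(1 - L). This is an illustrative reconstruction under the hypotheses stated above, not the author's own spreadsheet, and the function name `users_needed` is an assumption.

```python
import math


def users_needed(target_share: float, per_user_rate: float) -> float:
    """Unrounded n that solves 1 - (1 - L)^n = target_share."""
    if not (0.0 < target_share < 1.0 and 0.0 < per_user_rate < 1.0):
        raise ValueError("both arguments must lie strictly between 0 and 1")
    return math.log(1.0 - target_share) / math.log(1.0 - per_user_rate)


if __name__ == "__main__":
    # Original hypotheses: 84% of problems, 31% found per user.
    print(f"84% at L = 31%:   n = {users_needed(0.84, 0.31):.1f}")
    # Reappraised hypotheses: 99% of problems, 15.5% found per user.
    print(f"99% at L = 15.5%: n = {users_needed(0.99, 0.155):.1f}")
```

With the reappraised hypotheses the unrounded solution falls a little above 27, which matches the figure of 27 when rounded to the nearest whole user; rounding up instead would give 28. The function deliberately returns the unrounded value so that the rounding choice stays visible.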

From 5 to 27 users, and finally to 30!

We are reaching the end of our reappraisal exercise. Though 27 people is sufficient, there are two good reasons to round this figure up to 30:

- The first is purely psychological in nature. As with the optimal figure Thomas Landauer originally came up with, which was actually four rather than five, it seems simpler and easier to talk in terms of a sample of 30 individuals, even if there is no actual mathematical basis for this.
- The second reason relates to the statistical use of the results obtained. With fewer than 30 individuals, the normal distribution can no longer be assumed to apply. The normal distribution, however, is what enables us to carry out frequency analysis and calculate means/averages, which in turn makes it possible to evaluate each of the problems revealed by the users in terms of its seriousness and significance. Below this number, any calculation needs to be taken with a pinch of salt, as we are working with what is known as a small or nonparametric sample. In particular, this means that anyone performing calculations on a sample of five or even 10 individuals is taking huge risks where interpreting the results is concerned.

Okay, but isn't five users sometimes enough?

Absolutely, because identifying 84% of the issues and problems encountered by your customers can turn out to be a perfectly respectable outcome. This is especially the case where specific targeting results in recruitment costs that are closer to those observed by Nielsen and Landauer in 1993. Speaking in more specific terms, here at Ferpection we have introduced two intermediary sample sizes:

- Between 5 and 10 users for bespoke targeting purposes, such as in cases involving specific roles within companies or the customers of relatively specialized brands. In scenarios such as these, we remain close to the efficiency ratios provided by the Nielsen Norman Group.
- Between 15 and 20 users where targets are recruited using very specific targeting criteria, such as a specific type of online purchase or the long-term use of a particular application, etc. For the same cost, therefore, we will find between 92% and 97% of user-experience related problems and issues.

And what if I were to double the sample size to 60 users?

With 60 users, you inevitably also double the amount of resources committed – in terms of both time and money – just to achieve an increase of 0.9% in the number of problems found. It's therefore for you to decide whether it's worth it or not.
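The diminishing returns described here, along with the intermediate sample sizes from the previous section, can be laid out side by side. This is a minimal sketch assuming the reappraised per-user rate of 15.5%; the function names are illustrative, and the exact gain depends on which baseline and rate are plugged in.

```python
def proportion_found(n_users: int, per_user_rate: float) -> float:
    """Share of all problems found by n_users, assuming 1 - (1 - L)^n."""
    return 1.0 - (1.0 - per_user_rate) ** n_users


def coverage_table(sample_sizes, per_user_rate=0.155):
    """Return (n, share found, gain over the previous size in percentage points)."""
    rows = []
    previous_share = None
    for n_users in sample_sizes:
        share = proportion_found(n_users, per_user_rate)
        gain = None if previous_share is None else (share - previous_share) * 100.0
        rows.append((n_users, share, gain))
        previous_share = share
    return rows


if __name__ == "__main__":
    for n_users, share, gain in coverage_table([5, 10, 15, 20, 30, 60]):
        gain_text = "" if gain is None else f" (+{gain:.1f} points)"
        print(f"{n_users:>2} users: {share:.1%}{gain_text}")
```

Under this assumption, going from 30 to 60 users adds less than one percentage point, whereas the first 15 users already uncover the large majority of the problems.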

In conclusion, and with the context having changed and evolved since 1993 (and where targeting permits it), it now makes sense to replace samples of five locally-present users with samples of 30 remote users if you want to be able to confidently identify and prioritize the totality of the problems that exist with a UX.
